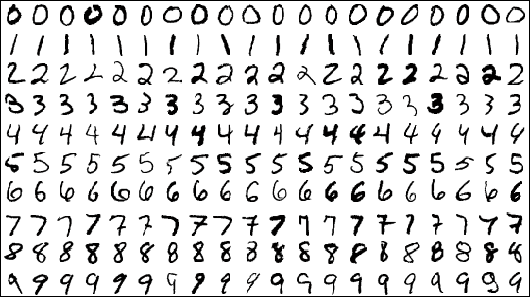

Multilayer Perceptron on MNIST Handwritten Data

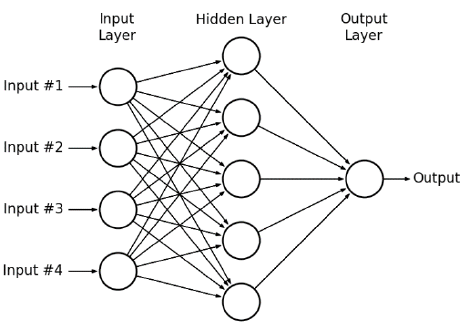

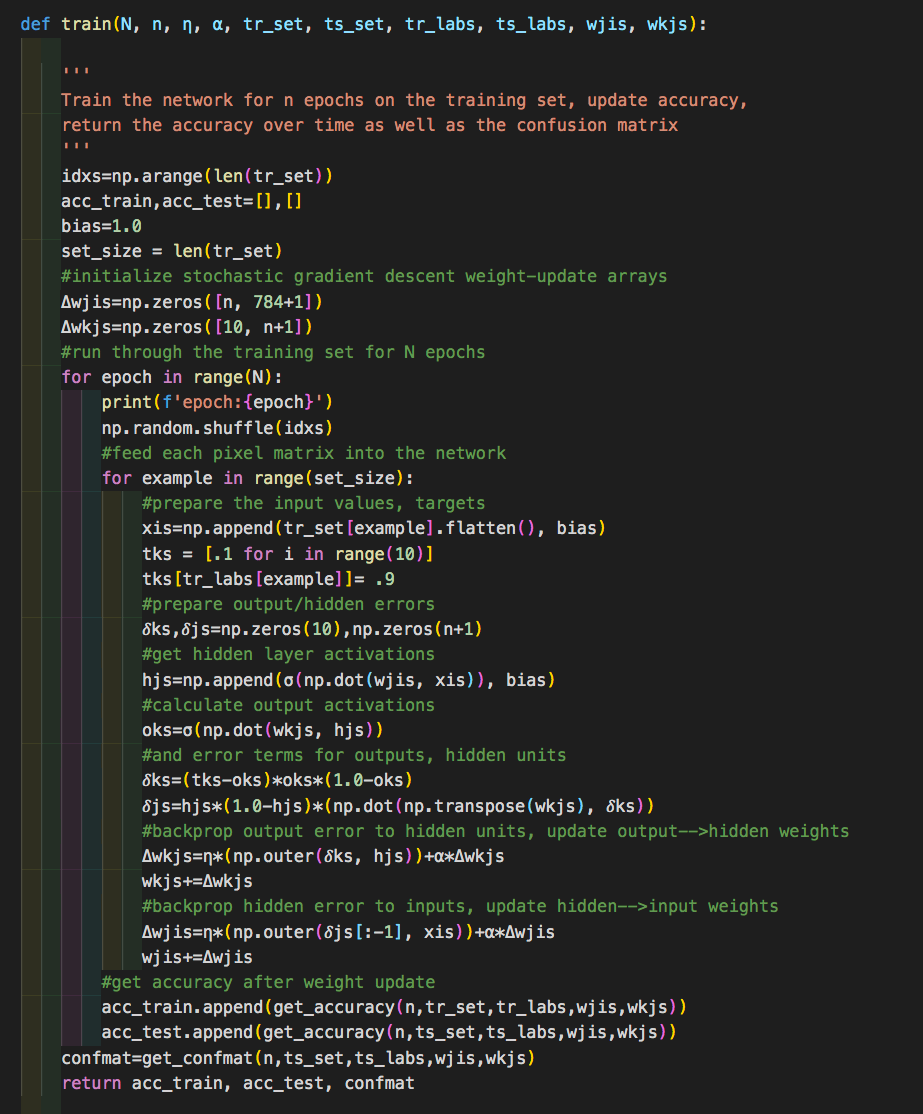

The MNIST dataset of handwritten digits is a classic benchmark for experimenting with perceptron learning. This project compares several hyperparameter settings for training a multilayer perceptron on MNIST, including the number of hidden-layer units, the momentum term, and the batch size used for training and testing. Training uses a feedforward pass followed by the backpropagation algorithm.
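The training loop described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: it uses a single sigmoid hidden layer, a squared-error loss, per-example updates (batch size 1), and a toy OR dataset in place of MNIST. The names `forward`, `train`, `n_hidden`, `lr`, and `momentum` are all assumptions made for the example, and a real MNIST run would more likely use NumPy or a deep learning library.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, W1, b1, W2, b2):
    """Feedforward pass: input -> sigmoid hidden layer -> single sigmoid output."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    y = sigmoid(sum(w * hi for w, hi in zip(W2, h)) + b2)
    return h, y


def train(data, n_hidden=3, lr=0.1, momentum=0.9, epochs=500, seed=0):
    """Train with backpropagation and a momentum term (hypothetical defaults)."""
    rng = random.Random(seed)
    n_in = len(data[0][0])
    W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    W2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
    b2 = 0.0
    # One velocity per parameter; momentum carries over part of the last update.
    vW1 = [[0.0] * n_in for _ in range(n_hidden)]
    vb1 = [0.0] * n_hidden
    vW2 = [0.0] * n_hidden
    vb2 = 0.0
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, t in data:
            h, y = forward(x, W1, b1, W2, b2)
            total += 0.5 * (y - t) ** 2
            # Backpropagation: output error first, then hidden-layer errors
            # (computed before any weights are changed).
            d_out = (y - t) * y * (1.0 - y)
            d_hid = [d_out * W2[j] * h[j] * (1.0 - h[j]) for j in range(n_hidden)]
            # Momentum update: v = momentum * v - lr * gradient; w += v
            for j in range(n_hidden):
                vW2[j] = momentum * vW2[j] - lr * d_out * h[j]
                W2[j] += vW2[j]
                vb1[j] = momentum * vb1[j] - lr * d_hid[j]
                b1[j] += vb1[j]
                for i in range(n_in):
                    vW1[j][i] = momentum * vW1[j][i] - lr * d_hid[j] * x[i]
                    W1[j][i] += vW1[j][i]
            vb2 = momentum * vb2 - lr * d_out
            b2 += vb2
        losses.append(total / len(data))  # mean loss over this epoch
    return (W1, b1, W2, b2), losses


# Toy stand-in for MNIST: learn the logical OR of two inputs.
toy_data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
            ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
params, losses = train(toy_data)
print("first epoch loss:", losses[0], "last epoch loss:", losses[-1])
```

Varying `n_hidden` and `momentum` in the call to `train` mirrors, on a very small scale, the kind of hyperparameter comparison the project describes. Batch size is fixed at 1 here to keep the sketch short.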

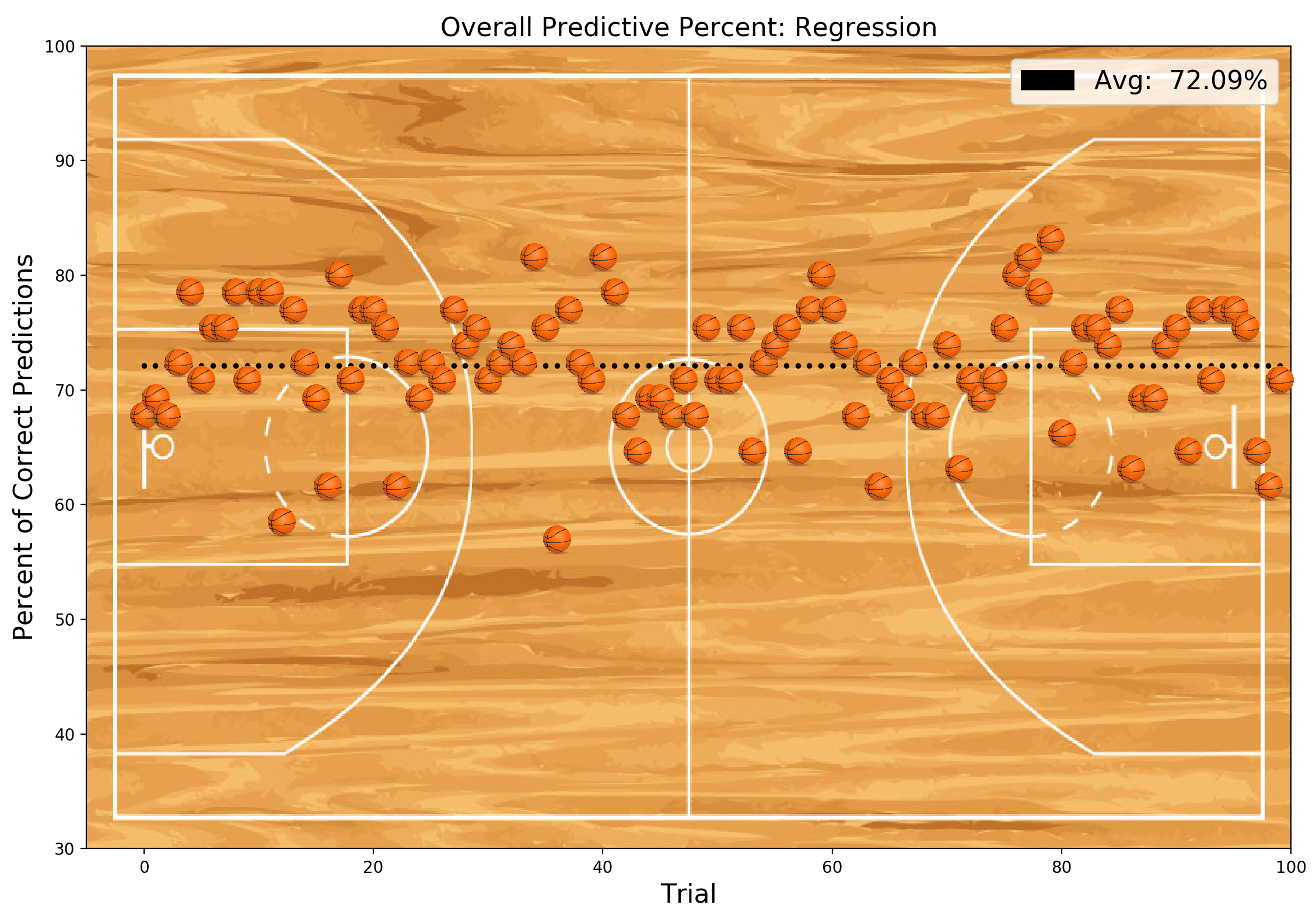

NBA Player Success Predictions

For this project, a team of four students worked on reproducing an existing research project. It compares Logistic Regression, SVM, and Random Forest algorithms on NBA player data, with the goal of determining whether a player, once drafted, will have a successful career.